Everything you need to know about real-device mobile testing: pros, cons, and setup. Also, how Mobot enhances real-device mobile testing so apps perform well across devices and conditions.

1. Introduction

Testing mobile applications can be complex, given the diverse array of devices, operating systems, and user conditions. To ensure a high-quality user experience across all platforms, testing on real mobile devices is essential. Unlike simulators and emulators, real devices show exactly how an application behaves in the hands of actual users. This article explores why real mobile device testing matters and how services like Mobot fit into the testing process to improve accuracy and efficiency.

2. Understanding Mobile Device Diversity

One of the major challenges in mobile development is device fragmentation. In today's market, mobile engineers and QA teams must account for numerous device brands, models, screen sizes, and operating system versions. This diversity can significantly affect an app’s functionality and user experience, making comprehensive testing on real devices not just beneficial, but critical.

For instance, a feature that works flawlessly on the latest iPhone might malfunction on an older Android device because of differences in hardware capabilities or operating system behavior. Processor speed, available RAM, and graphics rendering all vary between devices, and these hardware variables can dramatically alter the performance and behavior of mobile applications.

This complexity is compounded by the rapid pace at which new devices and updates are released. A testing strategy that covered all bases last year might be inadequate today. As a result, keeping a mobile testing platform updated with the latest devices is a continuous effort.

This is where mobile testing platforms with real devices, such as Mobot, prove invaluable. They provide access to a current and extensive array of devices, enabling teams to conduct thorough and effective testing that keeps pace with market developments.

By understanding and addressing the challenges posed by mobile device diversity, engineering teams can better ensure their applications perform reliably across all devices, leading to higher user satisfaction and retention.

To illustrate the significance of device diversity, consider a recent example involving an update to a popular social media app. Upon release, users on older Android devices (specifically, those running Android versions older than 9.0) experienced frequent crashes when trying to upload videos. Investigation revealed that the issue was related to the app's new video compression algorithm, which was optimized for newer hardware with advanced multi-threading support.

This problem was initially overlooked during the testing phases, primarily because the testing did not sufficiently cover the range of older Android devices still widely in use. As a result, the app's ratings on the Google Play Store dropped significantly until a patch could be issued to resolve the compatibility issue. This case underscores the critical need for inclusive testing strategies that reflect the actual spectrum of devices used by the target audience, reinforcing the value of real mobile device testing platforms that provide access to diverse hardware setups.
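The incident above comes down to a gap between the OS versions users actually run and the versions the test pool covers. Below is a minimal sketch of measuring that gap, assuming you can export the share of active users per Android API level from your analytics tool. The function names are illustrative and the share figures in the usage block are made up, not market data. (Android 9.0 corresponds to API level 28.)

```python
from typing import Dict, Iterable, List


def uncovered_share(user_share: Dict[int, float], tested_levels: Iterable[int]) -> float:
    """Fraction of users whose Android API level has no device in the test pool."""
    tested = set(tested_levels)
    total = sum(user_share.values())
    if total == 0:
        return 0.0
    missing = sum(share for level, share in user_share.items() if level not in tested)
    return missing / total


def missing_levels(user_share: Dict[int, float], tested_levels: Iterable[int],
                   min_share: float = 0.01) -> List[int]:
    """API levels used by at least `min_share` of users but absent from the pool."""
    tested = set(tested_levels)
    total = sum(user_share.values()) or 1.0
    return sorted(level for level, share in user_share.items()
                  if level not in tested and share / total >= min_share)


if __name__ == "__main__":
    # Hypothetical distribution by API level (26 = Android 8.0, 28 = Android 9).
    shares = {26: 0.04, 27: 0.06, 28: 0.10, 29: 0.15, 30: 0.20, 33: 0.25, 34: 0.20}
    pool = [28, 29, 30, 33, 34]  # the lab only has Android 9 and newer
    print(f"{uncovered_share(shares, pool):.0%} of users are on untested versions")
    print("Untested API levels:", missing_levels(shares, pool))
```

Run against these sample numbers, the check flags API levels 26 and 27 (Android 8.x), which is exactly the population the video-upload regression hit.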

3. Key Advantages of Testing on Real Devices

Testing on real mobile devices offers advantages that are crucial for delivering a strong mobile application. These benefits stem primarily from the authenticity of the test environment: a real device reproduces real-world user interactions more faithfully than any emulator or simulator can.

Accuracy of User Interactions

Real devices provide the exact touch interface, gesture controls, and visual rendering that end-users experience. For example, touch sensitivity varies significantly across devices—what works seamlessly on a high-end smartphone might be less responsive on a budget model. Capturing these nuances during testing ensures that applications are user-friendly and inclusive of the device spectrum in the market.

Additionally, functionalities like pinch-to-zoom, swipe, and tap behave differently across various screen sizes and resolutions. Using real devices in testing phases helps identify and rectify any user interface issues, which might not be fully replicated in simulated environments.

Network Conditions and Their Impact on App Performance

Mobile applications are often used under varying network conditions, which can affect app performance and functionality. Testing on real devices allows engineers to assess how network speed, connectivity changes, and transitions between Wi-Fi and cellular data affect the application’s performance.

An example here would be streaming apps, which need to manage data buffering under different network conditions to maintain a smooth user experience. Testing these apps on real devices situated in different network environments helps identify potential disruptions or performance degradation that users might face.
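Before running device tests, it can help to replay a bandwidth trace against a simple buffer model to see which network profiles are likely to cause stalls. This is a deliberately simplified sketch. It assumes one-second steps, a constant video bitrate, and playback that starts once two seconds of video are buffered; real players use adaptive bitrate and more elaborate rebuffering rules. The profile names and traces are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class NetworkProfile:
    name: str
    bandwidth_kbps: List[int]  # available throughput for each one-second step


def simulate_playback(profile: NetworkProfile, bitrate_kbps: int,
                      startup_buffer_s: int = 2) -> Tuple[Optional[int], int]:
    """Return (startup_delay_s, stall_s); startup_delay_s is None if playback never starts."""
    buffered_kb = 0
    startup_delay: Optional[int] = None
    stall_s = 0
    for second, bandwidth in enumerate(profile.bandwidth_kbps, start=1):
        buffered_kb += bandwidth  # data downloaded during this second
        if startup_delay is None:
            # Wait until enough video is buffered to begin playback.
            if buffered_kb >= startup_buffer_s * bitrate_kbps:
                startup_delay = second
            continue
        if buffered_kb >= bitrate_kbps:
            buffered_kb -= bitrate_kbps  # one second of video played
        else:
            stall_s += 1  # not enough data; playback resumes once a full second is buffered
    return startup_delay, stall_s


if __name__ == "__main__":
    profiles = [
        NetworkProfile("stable Wi-Fi", [5000] * 10),
        NetworkProfile("Wi-Fi to weak cellular", [3000, 3000, 500, 500, 500, 500, 3000, 3000]),
        NetworkProfile("very weak signal", [500] * 5),
    ]
    for p in profiles:
        print(p.name, simulate_playback(p, bitrate_kbps=2500))
```

The same profile list can then drive real-device runs, so the model's predictions and the app's actual behavior can be compared side by side.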

Sensor-Based Functionalities and Their Complexities

Many modern apps leverage device sensors such as GPS, accelerometers, and gyroscopes. These sensors can behave differently depending on the device's hardware and operating system. For instance, a fitness tracking app relies heavily on the accelerometer and GPS to provide accurate activity tracking. Testing these features on real devices ensures that the app records and processes data accurately across different models and makes.
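One way to check GPS-based tracking across devices is to cover a route of known length and compare it with the distance each device records. Below is a minimal sketch using the haversine great-circle formula. It assumes the app can export the recorded track as (latitude, longitude) pairs in degrees. The 5% tolerance is an arbitrary example, not a standard.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres
Point = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_m(a: Point, b: Point) -> float:
    """Great-circle distance between two points, in metres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def track_distance_m(points: List[Point]) -> float:
    """Total length of a recorded track: sum of consecutive segment distances."""
    return sum(haversine_m(p, q) for p, q in zip(points, points[1:]))


def within_tolerance(recorded_m: float, reference_m: float, tolerance: float = 0.05) -> bool:
    """True if the device's distance is within `tolerance` (fractional) of the reference."""
    return abs(recorded_m - reference_m) <= tolerance * reference_m


if __name__ == "__main__":
    # Hypothetical recorded track: three points along a meridian.
    track = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
    print(round(track_distance_m(track)))  # one degree of latitude, about 111 km
```

Running the same comparison on every device in the pool turns "the GPS seems off on some phones" into a per-model pass/fail result.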

A mobile testing platform with real devices, like Mobot, provides access to a wide range of devices equipped with various sensors. This access allows teams to conduct thorough testing that covers the full spectrum of user scenarios and interactions, thereby increasing the reliability of the app across all supported devices.

These examples highlight just a few of the many advantages of real mobile device testing. By integrating such testing into the development cycle, teams can significantly enhance the quality and reliability of mobile apps, ensuring they meet user expectations in real-world conditions. Such thorough testing is not just a phase but a fundamental component of successful mobile app development.

4. How Mobot Enhances Real Device Testing

Mobot is a platform that stands out in the domain of real mobile device testing by addressing some of the most persistent challenges faced by mobile QA teams. Here we look at how Mobot integrates with existing test frameworks, how it uses mechanical robots to execute tests, and how it combines automation with the benefits of manual testing.

Integration with Existing Test Frameworks

Mobot is not designed to replace existing testing frameworks but to enhance them. It seamlessly integrates with popular tools like Appium, XCTest, and Espresso, allowing teams to leverage their existing test scripts while benefiting from Mobot's strong device lab. This integration is straightforward, typically involving the inclusion of Mobot's API into the existing test setup.

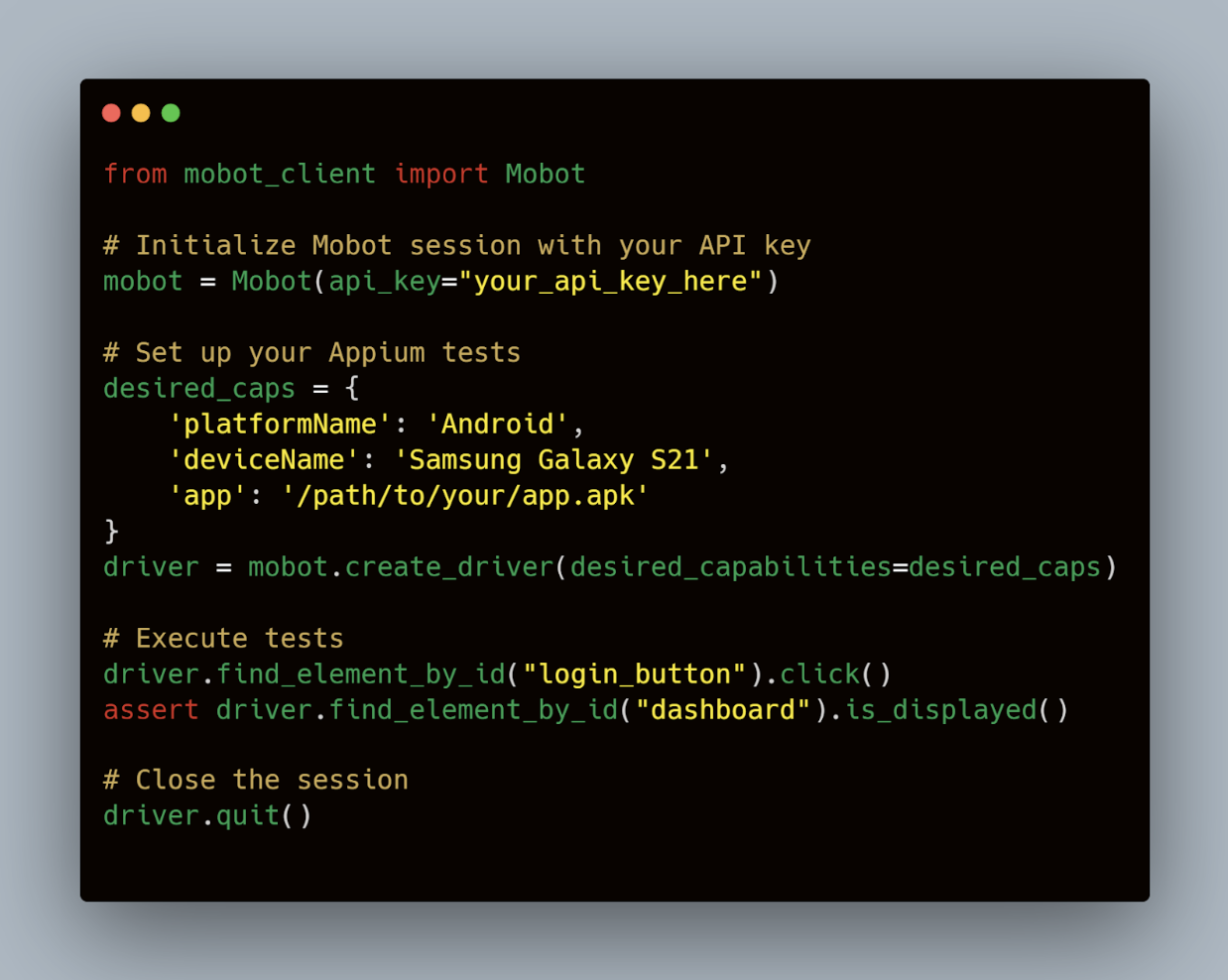

For instance, consider a mobile engineering team using Appium for automated UI testing. By integrating Mobot, they can run these tests on a wide array of real devices hosted remotely. This process might look something like this in code:


This snippet shows how a typical Appium test script can be adapted to use Mobot's platform, targeting specific devices available in its fleet.
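Whatever device cloud you target, an Appium session starts when the client sends capabilities to a remote WebDriver endpoint. Below is a minimal sketch of building W3C-style capabilities for several devices. The fleet format, device names, and app paths are hypothetical, and the code uses no Mobot-specific API. The `appium:`-prefixed keys are standard Appium 2 capability names.

```python
import json
from typing import Any, Dict, List

# Appium automation driver per platform.
AUTOMATION = {"Android": "UiAutomator2", "iOS": "XCUITest"}


def build_capabilities(platform: str, device_name: str, platform_version: str,
                       app_path: str) -> Dict[str, Any]:
    """W3C capabilities for one real device (non-standard keys use the appium: prefix)."""
    return {
        "platformName": platform,
        "appium:automationName": AUTOMATION[platform],
        "appium:deviceName": device_name,
        "appium:platformVersion": platform_version,
        "appium:app": app_path,
    }


def new_session_body(caps: Dict[str, Any]) -> Dict[str, Any]:
    """Request body for the W3C WebDriver 'New Session' command."""
    return {"capabilities": {"alwaysMatch": caps, "firstMatch": [{}]}}


def sessions_for_fleet(fleet: List[Dict[str, str]],
                       app_paths: Dict[str, str]) -> List[Dict[str, Any]]:
    """One New Session body per device; app_paths maps platform -> build (.apk / .ipa)."""
    return [
        new_session_body(build_capabilities(d["platform"], d["name"], d["version"],
                                            app_paths[d["platform"]]))
        for d in fleet
    ]


if __name__ == "__main__":
    fleet = [  # illustrative entries; use the device names your provider exposes
        {"platform": "Android", "name": "Pixel 7", "version": "14"},
        {"platform": "iOS", "name": "iPhone SE (3rd generation)", "version": "17"},
    ]
    builds = {"Android": "/path/to/app.apk", "iOS": "/path/to/app.ipa"}
    for body in sessions_for_fleet(fleet, builds):
        print(json.dumps(body))
```

With the Appium Python client, the same capability dictionary can be loaded into the client's options object and passed to `webdriver.Remote` together with the hub URL your provider documents.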

Using Real Mechanical Robots

Mobot uses real mechanical robots to test with a high degree of accuracy and consistency. Its fleet of robots uses computer vision to execute your test cases the same way every time, testing the app on 300+ physical devices as a human would. Your users aren’t on emulators or virtual devices. With Mobot, you can test your native and cross-platform mobile apps the way they will be used in the real world: on real iOS and Android devices.

Automation with the Perks of Manual Testing

Mobot helps you eliminate tedious manual testing, including tricky, complicated tests such as multi-device messaging, deep linking, push notifications, and Bluetooth connections to peripheral devices. At the same time, Mobot preserves the perks of manual testing: every Mobot customer is paired with an expert customer success manager who understands your app and your test plans, and who can distinguish a real bug from a minor design change.

5. Automated vs. Manual Testing on Real Devices

When it comes to mobile device testing, both automated and manual methods have their distinct roles, each with its strengths and limitations. A balanced approach that leverages the benefits of both can dramatically enhance the testing efficiency and product quality.

Comparing Strengths and Weaknesses

Automated Testing is indispensable for its ability to run a large volume of tests repeatedly across multiple devices without human intervention. This is particularly beneficial for regression testing, where the same tests need to be executed frequently to verify that recent changes have not broken existing functionality. For instance, automated scripts can simulate thousands of user interactions, helping verify that the application handles the expected load and functions correctly under stress.

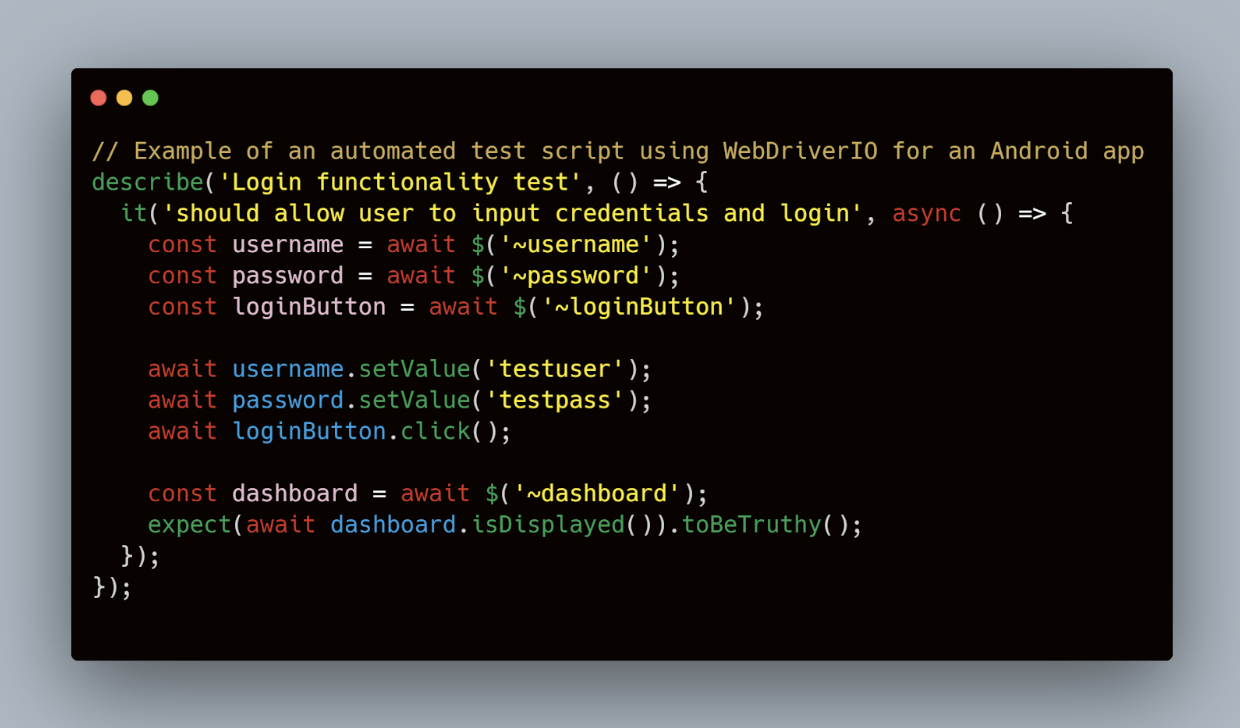

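In practice, regression testing means running the same set of checks against every device and collecting a pass/fail grid. Below is a minimal, framework-agnostic sketch using a thread pool. The test functions and device IDs are hypothetical stand-ins for real driver-based tests, and each test is assumed to raise `AssertionError` when it fails.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

TestFn = Callable[[str], None]  # receives a device ID; raises AssertionError on failure


def run_on_device(device: str, tests: Dict[str, TestFn]) -> Dict[str, str]:
    """Run every test on one device and record pass / fail / error."""
    results: Dict[str, str] = {}
    for name, test in tests.items():
        try:
            test(device)
            results[name] = "pass"
        except AssertionError:
            results[name] = "fail"
        except Exception:  # infrastructure problems are reported separately from failures
            results[name] = "error"
    return results


def run_regression(devices: List[str], tests: Dict[str, TestFn],
                   max_workers: int = 4) -> Dict[str, Dict[str, str]]:
    """Run the suite on all devices in parallel; returns {device: {test: outcome}}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outcomes = list(pool.map(lambda d: run_on_device(d, tests), devices))
    return dict(zip(devices, outcomes))


# Hypothetical example tests.
def example_login_test(device: str) -> None:
    assert device, "device ID must not be empty"


def example_upload_test(device: str) -> None:
    assert not device.startswith("android-8"), f"upload crashed on {device}"


if __name__ == "__main__":
    suite = {"login": example_login_test, "upload": example_upload_test}
    for device, outcome in run_regression(["pixel-7", "android-8-budget"], suite).items():
        print(device, outcome)
```

Keeping "fail" and "error" separate matters on real devices, where a dropped connection to the device should not be reported as an app bug.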

Manual Testing, on the other hand, is crucial for evaluating user experience elements such as look and feel, usability, and the overall intuitiveness of the application. It allows testers to provide subjective feedback, which is vital for apps where user satisfaction is a priority. For example, manual testing is essential when assessing a new user interface to ensure that elements are not only functionally correct but also aesthetically pleasing and easy to navigate.

Scenarios Where Manual Testing is Indispensable

While automated testing offers speed and repeatability, there are scenarios where manual testing is more effective:

- Exploratory Testing: This is a hands-on approach where testers explore the software to identify unexpected behaviors or potential improvements. Exploratory testing relies heavily on the tester's experience, intuition, and creativity.

- Real User Simulation: Manual testing is better at simulating real user behaviors, such as gestures on a mobile device, which might be overlooked by automated scripts.

- Ad-hoc Use Cases: Certain test cases are too complex or too specific to set up with automation scripts due to their need for nuanced human input or decision-making during execution.

Both testing methods contribute uniquely to a comprehensive testing strategy. Automated testing excels in ensuring basic functionalities work as expected across updates, while manual testing offers insights into user-centric aspects of the application that automated tests might miss.

In integrating tools like Mobot into their testing processes, teams can harness the best of both worlds. Mobot provides a mobile testing platform with real devices, facilitating automated test execution while also supporting manual testing efforts. This dual capability ensures that mobile apps are not only strong in their performance but also deliver superior user experiences tailored to meet real-world needs.

6. Setting Up a Real Device Test Environment

Setting up a strong testing environment using real devices is crucial for mobile QA teams to ensure their applications function correctly under various conditions and on different devices. This section covers essential considerations and a practical setup example using popular mobile testing tools to help teams effectively test on real devices.

Choosing Devices for a Test Suite

The selection of devices for a test suite should reflect the target demographic's most commonly used devices as well as those that represent the extremes of performance capabilities and operating systems. This selection often includes:

- High-End Phones: To ensure the app performs well under optimal conditions.

- Mid-Range Phones: These are often the most popular and represent the average user's experience.

- Older Models: To guarantee backward compatibility and functionality for users who have not upgraded their devices.

For example, if a significant portion of your user base is in emerging markets, including lower-end devices with limited RAM and older operating systems is essential. Tools like Google Analytics can help determine what devices are most popular among your users.
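Analytics data can be turned into a device list mechanically. First pick the most-used models until a coverage target is met, then make sure each tier from the list above is represented. Below is a minimal sketch of that approach. The `Device` fields, the tier labels, and the default 80% target are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Device:
    model: str
    tier: str     # "high", "mid", or "old"
    share: float  # fraction of your active users on this model


def select_devices(devices: List[Device], target_coverage: float = 0.8,
                   required_tiers: Sequence[str] = ("high", "mid", "old")) -> List[Device]:
    """Greedy pick by usage share, then top up any tier that is still missing."""
    ranked = sorted(devices, key=lambda d: d.share, reverse=True)
    chosen: List[Device] = []
    covered = 0.0
    for d in ranked:
        if covered >= target_coverage:
            break
        chosen.append(d)
        covered += d.share
    # Ensure every tier is represented by its most popular model.
    for tier in required_tiers:
        if not any(d.tier == tier for d in chosen):
            candidates = [d for d in ranked if d.tier == tier]
            if candidates:
                chosen.append(candidates[0])
    return chosen
```

The tier top-up step is what keeps a low-share but high-risk segment, such as older phones in emerging markets, from being dropped just because the popular mid-range models already meet the coverage target.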

Handling Device-Specific Software and Hardware Issues

Different devices come with unique challenges, such as varying screen resolutions, hardware capabilities, and even OEM-specific modifications to operating systems. To manage these effectively:

- Use Device Metrics Databases: Services like GSMArena provide detailed specifications for hundreds of devices which can help in understanding the limitations and features of different models.

- Device Rotation: Regularly update the device pool to include new releases and retire older, less popular devices.

- Hardware Checks: Regularly test hardware-specific features like cameras, sensors, and battery life under different conditions.
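Device rotation can also be made repeatable. The idea is to retire models whose share of your users has fallen below a threshold and fill the freed slots with new releases. Below is a minimal sketch. The 1% threshold and the pool size are arbitrary examples, and a model missing from the analytics export is treated as having zero share.

```python
from typing import Dict, List, Tuple


def rotate_pool(pool: List[str], usage_share: Dict[str, float], new_releases: List[str],
                retire_below: float = 0.01, max_size: int = 10
                ) -> Tuple[List[str], List[str], List[str]]:
    """Return (keep, retire, add) for the next device pool."""
    # Keep models that still have meaningful usage; unknown models count as 0%.
    keep = [m for m in pool if usage_share.get(m, 0.0) >= retire_below]
    retire = [m for m in pool if m not in keep]
    # Fill free slots with new releases not already in the pool, in the given order.
    room = max(0, max_size - len(keep))
    add = [m for m in new_releases if m not in pool][:room]
    return keep, retire, add
```

Running this on a schedule (for example, after each analytics export) makes pool changes reviewable instead of ad hoc.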

Example Setup Using Popular Mobile Testing Tools

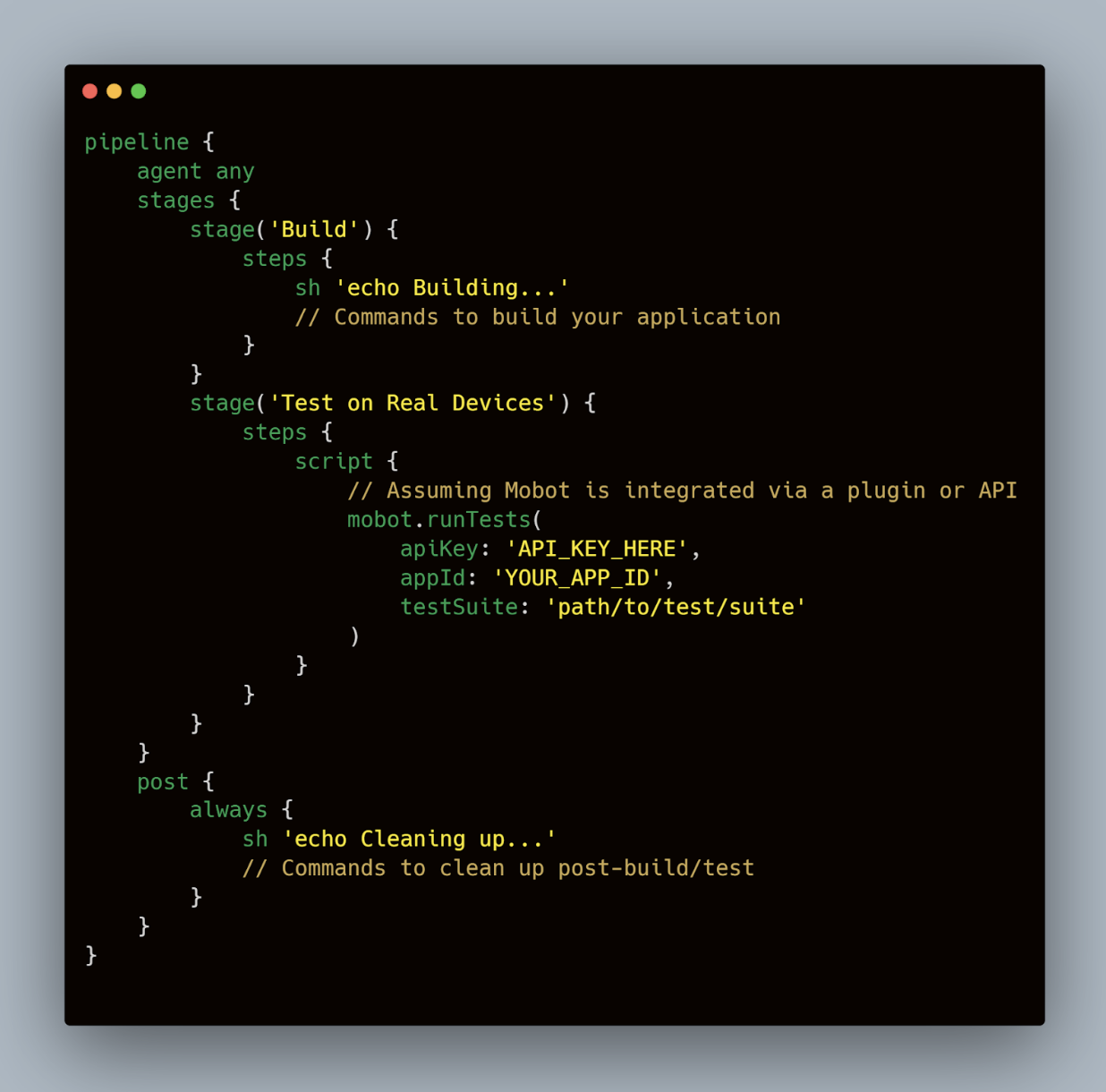

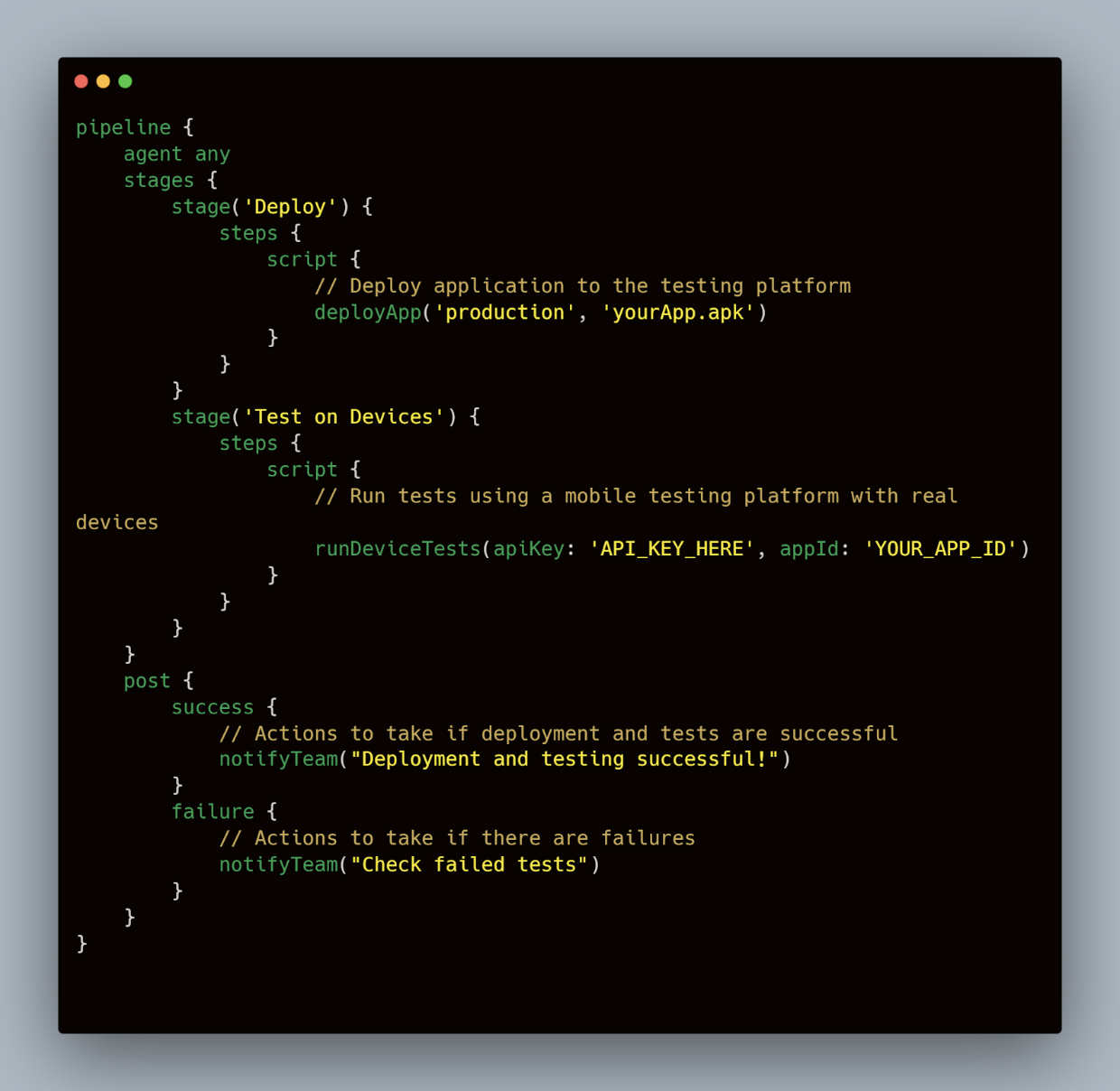

Integrating a mobile testing platform with real devices into your CI/CD pipeline can streamline the setup. Here’s an example of how you might configure a Jenkins pipeline to use a real device testing platform like Mobot:


This script demonstrates a basic Jenkins pipeline where the application is built, and then tests are run on real devices using Mobot. The mobot.runTests method takes parameters such as an API key, application ID, and the path to the test suite, simplifying the process of executing tests across a diverse device pool.
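The `mobot.runTests` call described above takes an API key, an application ID, and a test suite path. As a hedged illustration of handling those same inputs, here is a small Python helper that a pipeline stage could call. It reads the key from an environment variable so the key never appears in the Jenkinsfile. The endpoint URL, JSON field names, and environment variable names are hypothetical placeholders, not Mobot's actual API.

```python
import json
import os
import urllib.request

# Placeholder endpoint: substitute the URL your device-testing provider documents.
API_URL = "https://device-cloud.example.com/v1/test-runs"


def build_test_run_request(api_key: str, app_id: str, test_suite_path: str,
                           url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request that starts a test run."""
    if not api_key:
        raise ValueError("API key missing: inject it from the CI credential store")
    if not app_id or not test_suite_path:
        raise ValueError("application ID and test suite path are required")
    body = json.dumps({"appId": app_id, "testSuite": test_suite_path}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def submit(request: urllib.request.Request, timeout_s: float = 30.0) -> int:
    """Send the request and return the HTTP status (urlopen raises on 4xx/5xx)."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return response.status


def request_from_env() -> urllib.request.Request:
    """Read inputs from environment variables set by the CI job (names are hypothetical)."""
    return build_test_run_request(
        os.environ.get("DEVICE_CLOUD_API_KEY", ""),
        os.environ.get("APP_ID", ""),
        os.environ.get("TEST_SUITE_PATH", "tests/"),
    )
```

In a Jenkins declarative pipeline, the key would typically be injected with the `withCredentials` step, and a stage would run a script like this with `sh`.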

7. Best Practices for Real Device Mobile Testing

Establishing a set of best practices is vital for optimizing the efficiency and effectiveness of mobile testing strategies, especially when dealing with the complexities of real device testing. Here, we outline key methodologies that enhance test coverage and ensure strong integration into continuous deployment cycles.

Test Planning and Management

Effective test planning is the cornerstone of any successful mobile testing initiative. It involves defining what to test, how to test it, and when to test it. For mobile apps, this means:

- Creating a Device Matrix: Identify a representative sample of devices that encompasses different manufacturers, screen sizes, operating systems, and hardware capabilities. This ensures that tests cover the broadest possible range of user scenarios.

- Prioritizing Test Scenarios: Focus on critical areas such as user authentication, payment gateways, or any features directly impacting the core functionality of the app.

- Version Control for Test Scripts: Maintain and manage test scripts with version control systems like Git to ensure that changes are tracked and reversible, facilitating collaborative work among QA teams.

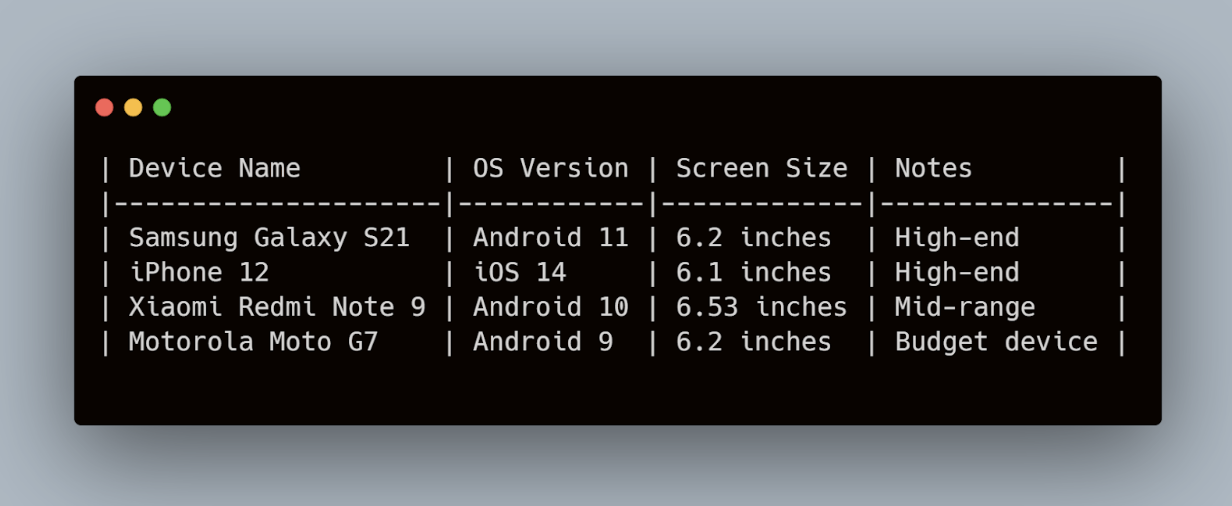

An example of a simple device matrix structure might be a table listing device names, OS versions, screen sizes, and any particular notes relevant to testing:

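A device matrix like this can be kept in version control as data and rendered as a plain-text table for review. Below is a minimal sketch. The rows in the usage block are illustrative, and the low-end entry is a placeholder rather than a specific model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MatrixRow:
    device: str
    os_version: str
    screen_in: float  # diagonal screen size in inches
    notes: str


HEADERS = ["Device", "OS version", "Screen (in)", "Notes"]


def render_matrix(rows: List[MatrixRow]) -> str:
    """Render rows as an aligned plain-text table."""
    cells = [[r.device, r.os_version, f"{r.screen_in:.1f}", r.notes] for r in rows]
    widths = [max(len(line[i]) for line in [HEADERS] + cells) for i in range(len(HEADERS))]

    def fmt(values: List[str]) -> str:
        # Pad each column to its width; strip trailing spaces from the last column.
        return " | ".join(v.ljust(w) for v, w in zip(values, widths)).rstrip()

    lines = [fmt(HEADERS), "-+-".join("-" * w for w in widths)]
    lines.extend(fmt(row) for row in cells)
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_matrix([
        MatrixRow("Pixel 7", "Android 14", 6.3, "High-end Android reference"),
        MatrixRow("iPhone SE (3rd generation)", "iOS 17", 4.7, "Small screen, Touch ID"),
        MatrixRow("Low-end Android (placeholder)", "Android 9", 5.5, "2 GB RAM, emerging markets"),
    ]))
```

Because the matrix is plain data, changes to it show up in Git diffs alongside the test scripts, which fits the version-control practice above.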

Data-Driven Approaches to Enhance Test Coverage

Leveraging data to guide testing efforts can significantly improve the effectiveness of the testing process. This involves analyzing usage statistics to identify the most commonly used features and incorporating user feedback to pinpoint areas that may require more intensive testing. Tools like Google Analytics or Firebase can provide invaluable insights into how users interact with the app in real-world scenarios.

For example, crash report data can show which features fail most often, so stabilization work can focus on those features first.
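Below is a minimal sketch of ranking features from exported crash reports. It assumes each record carries the feature (or screen) where the crash happened and an anonymized user ID. Features are ranked by distinct users affected first and raw crash count second, so a crash loop on a single device does not outrank a bug that hits many users. That ordering is a design choice, not a standard.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def rank_crashing_features(crashes: Iterable[Tuple[str, str]]) -> List[str]:
    """crashes: (feature, user_id) pairs; returns features, most urgent first."""
    counts: Counter = Counter()
    users: Dict[str, Set[str]] = defaultdict(set)
    for feature, user_id in crashes:
        counts[feature] += 1
        users[feature].add(user_id)
    # Primary key: how many distinct users hit the crash; secondary: total crashes.
    return sorted(counts, key=lambda f: (len(users[f]), counts[f]), reverse=True)


if __name__ == "__main__":
    sample = [  # hypothetical export
        ("video_upload", "u1"), ("video_upload", "u1"), ("video_upload", "u1"),
        ("login", "u2"), ("login", "u3"),
        ("feed", "u4"),
    ]
    print(rank_crashing_features(sample))
```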

Continuous Integration and Deployment Strategies

Integrating testing into the continuous integration/continuous deployment (CI/CD) pipeline is essential for agile development environments. This approach allows teams to detect issues early and iterate quickly on feedback.

- Automate Deployment for Testing: Use tools like Jenkins, CircleCI, or GitLab CI to automate the deployment of builds to test environments.

- Real-Time Test Monitoring: Implement dashboards that provide real-time insights into the testing process and highlight failures as they occur.

An example of a Jenkins pipeline script that integrates with a real device testing platform might look like this:

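Real-time monitoring usually starts from the result files the test runner already produces. Many runners can emit JUnit-style XML (pytest's `--junitxml` option, for example), and Jenkins can publish the same files with its `junit` step. Below is a minimal sketch that extracts failing tests from such a report so a pipeline can print them or post them to a dashboard. The sample report is invented.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def failed_tests(junit_xml: str) -> List[Tuple[str, str]]:
    """Return (classname.name, message) for every failed or errored test case."""
    root = ET.fromstring(junit_xml)
    failures = []
    for case in root.iter("testcase"):  # works for <testsuite> and <testsuites> roots
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is not None:
            name = f"{case.get('classname', '')}.{case.get('name', '')}"
            failures.append((name, problem.get("message", "")))
    return failures


if __name__ == "__main__":
    report = """<testsuite name="smoke" tests="2">
      <testcase classname="LoginTest" name="test_valid_login"/>
      <testcase classname="UploadTest" name="test_video_upload">
        <failure message="Timed out waiting for upload button"/>
      </testcase>
    </testsuite>"""
    for name, message in failed_tests(report):
        print(f"FAILED {name}: {message}")
```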

These best practices are not only about executing tests but also about ensuring that every aspect of the application is scrutinized under conditions that closely mimic the real world. Through careful planning, data-driven insights, and seamless integration into development workflows, mobile engineering teams can ensure their applications are strong and user-friendly.

8. Challenges and Solutions in Real Device Testing

Testing on real devices, while critical for mobile app validation, brings its own set of challenges. Here, we explore common pitfalls in real device testing and provide practical solutions to help engineering teams navigate these obstacles effectively.

Common Pitfalls

Device Availability and Management: One of the main hurdles in real device testing is ensuring the availability of the necessary device types, particularly for teams without access to extensive device labs. This issue is compounded when tests require specific devices or those that are in high demand due to recent releases.

Environmental Variability: Unlike controlled lab conditions, real device testing must account for a variety of real-world conditions, such as different network speeds, background app interference, and battery life status, all of which can affect app performance unpredictably.

Flakiness in Test Execution: Tests running on real devices can sometimes be flaky—showing different outcomes for the same test run without any changes in the code. This can be due to transient network issues, performance variations, or UI changes that haven't been accounted for in the test scripts.

Practical Solutions

To address these challenges, here are some strategies that teams can implement:

1. Leveraging Device Clouds: Utilizing a mobile testing platform with real devices, such as Mobot, can solve the issue of device availability. These platforms provide access to a diverse range of devices over the cloud, making it easy to manage and scale testing efforts without the overhead of maintaining a physical device lab.

2. Standardizing Test Environments: To minimize environmental variability, standardize the testing environment as much as possible. This includes setting network conditions, disabling unnecessary background apps, and controlling battery settings during tests.

3. Enhancing Test Reliability: To reduce flakiness, make test scripts robust enough to tolerate expected UI changes. Implement retry mechanisms in test scripts, and use explicit waits rather than fixed sleeps to handle variability in device performance and response times.
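An explicit wait polls for a condition until a deadline instead of sleeping for a fixed time. Fast devices are not slowed down, and slow ones get the time they need. Appium's Python client builds on Selenium, whose `WebDriverWait` already implements this pattern. The stdlib sketch below only shows the mechanism. The condition can be any callable, for example one that looks up an element and returns it, or returns a falsy value if the element is not there yet.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout_s: float = 10.0,
               poll_s: float = 0.25) -> T:
    """Call `condition` until it returns a truthy value or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s  # monotonic clock ignores wall-clock changes
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        time.sleep(poll_s)
```

By contrast, `time.sleep(5)` always costs five seconds and still fails if a slow device needs six.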

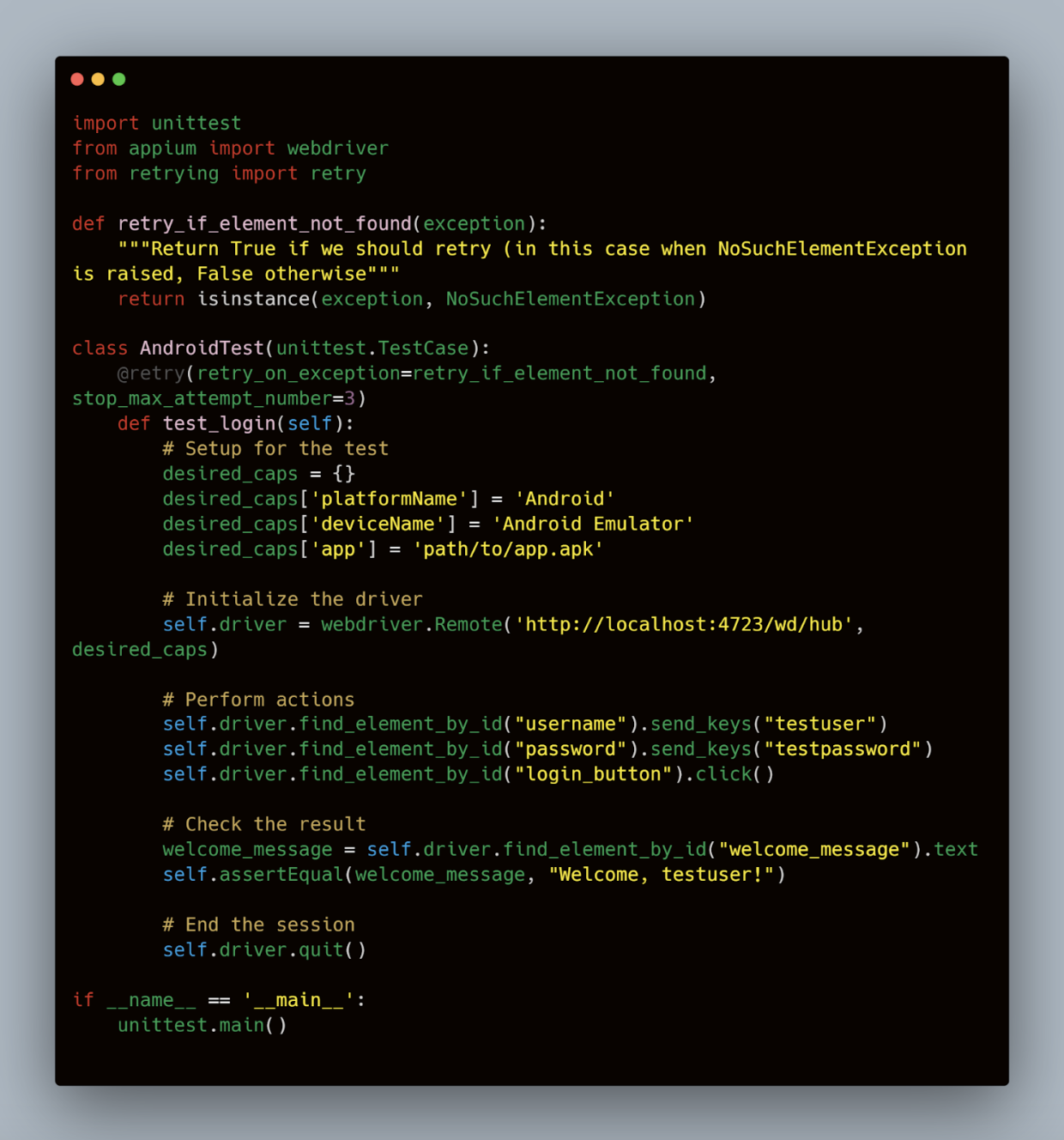

Example of a Test Script with Retry Mechanism:


This example uses the Python retrying library to retry the login test up to three times if it fails due to an element not being found, addressing common issues of flakiness in mobile UI tests.
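The `retrying` package exposes a `@retry` decorator with options such as `stop_max_attempt_number`. For teams that prefer no extra dependency, here is a minimal stdlib sketch of the same idea. `ElementNotFound` is a hypothetical stand-in for your driver's "element not found" exception. Retries are limited to that exception, so genuine assertion failures still fail on the first attempt.

```python
import functools
import time
from typing import Tuple, Type


class ElementNotFound(Exception):
    """Hypothetical stand-in for a driver's 'no such element' error."""


def retry(times: int = 3, on: Tuple[Type[BaseException], ...] = (ElementNotFound,),
          delay_s: float = 0.0):
    """Re-run the decorated function, up to `times` attempts, when it raises one of `on`."""
    if times < 1:
        raise ValueError("times must be at least 1")

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except on:
                    if attempt == times:
                        raise  # out of attempts: surface the last error
                    time.sleep(delay_s)
        return wrapper
    return decorator
```

It is worth logging each retry: a test that only passes on its third attempt is still a signal of flakiness worth tracking.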
